Lattice Math

Lattices are a simple but powerful mathematic concept that can greatly simplify programming distributed systems.

Lattices For Dummies

So, what is a lattice? Lattices are conceptually very simple, so let's explain them without too much mathy language. A lattice is some type of thing that has a very special merge function. The merge function combines two things and produces an output thing. The core feature of lattices is that the merge function has some special properties: associativity, commutativity, and idempotence (abbreviated ACI).

Lets start with a simple example of something which turns out to not be a lattice: numbers and addition. Numbers are a type of thing, and addition is a function that takes two input numbers and produces an output number! But does it satisfy the ACI properties?

Let's start with commutativity. A function is commutative if it doesn't matter if you swap its two inputs:

Addition is commutative, so that property is satisfied! (In contrast, subtraction is not commutative).

Next associativity. A function is associative if, when there are multiple calls together, it does not matter in what order you evaluate the calls:

Addition satisfies associativity! (Again, subtraction is not associative -- if you subtract a subtraction then you're doing addition!)

Finally, idempotence. A function is idempotent if when you give it the same value twice it returns that same value.

Addition is NOT idempotent, because is , not just . This works for , but not for all numbers.

Now lets look at something that is a lattice. Numbers and the max function. It is

commutiative; the max of is the same as the max of . It is associative; the max of

will always be the same no mater what order you look at them in. And, unlike addition,

it is idempotent; the max of and is always just .

The most standard, canonical example of a lattice is sets with the union function. It is commutative and associative; it doesn't matter what order you union sets together, the result will always be the same. And it is idempotent; a set unioned with itself is the same set.

Lattice Partial Order

When learning about lattices you'll hear about some sort of "partial order" relating to them. What is a partial order? It's like a normal order, where you can say " comes before ", but it is partial because sometimes say " and are incomparable" instead. As it turns out, the lattice merge function actually creates a partial order on the elements of the lattice. Specifically, we say that whenever you merge two things, the thing you get out is always bigger than each of the inputs (or it might be equal to one or both of them).

So for the number-max lattice, tells us that and .

Ok duh. It turns out that the partial order created by the max merge function is naturally just

numerical order (i.e. ). Additionally, it is a total order, meaning all pairs of items are

comparable. But things get a bit more interesting with other lattices.

|

A visualization of the max total order over positive integers. |

Let's consider the set-union lattice. As it turns out, the partial order created by the union () function matches subset order, .

So, both of these subsets, and are before . Or equivalently, is larger.

But what about the ordering between and ? It turns out these two sets are incomparable under the set-union order. Neither is a subset of the other, so neither can come first. Equivalently, going back to the merge function, there is no thing which you could merge (union) into one of the sets in order to get the other set out.

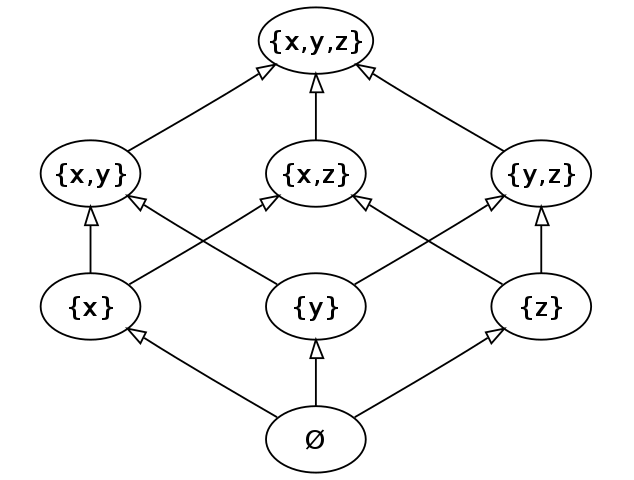

So above, we visualized the number-max lattice partial order as a graph, albeit a very flat, linear, number-line graph. We can visualize our set-union partial order as a (much more interesting) graph as well: (this is called a Hasse diagram)

|

| A visualization of the set-union partial order over three elements, . By KSmrq |

The directed paths represent things getting larger. In the diagram, is less (smaller) than , represented as a path upwards from the former to the later. In contrast, there is no path between and for example, so they are incomparable.

The merge function is also called the least upper bound (LUB). This name comes from the partial order interpretation. When merging two elements, the result is the smallest (least) item that is still greater than both elements. Hence least upper bound.

Reference

This section is a quick reference for people already comfortable with abstract algebra basics.

Lattices

A join-semilattice with domain with and join (or "merge") function has the following properties:

(Separately, meet-semilattices and join-semilattices are equivalent structures)

The join function creates a partial order :

Read as " preceedes ", or " dominates ".

The smallest element in a lattice domain , if it exists, is the bottom, :

The largest element in a lattice domain , if it exists, is the top, :

The CALM Theorem and Monotonicity

The CALM Theorem (Consistency As Logical Monotonicity) tells us: "a program has a consistent, coordination-free distributed implementation if and only if it is monotonic"

A function is monotonic if it preserves a partial ordering of its domain to a (possibly different) partial ordering of its codomain.

Lattice Morphism

A function from lattice domain to lattice codomain is a morphism if it structurally preserves merges, i.e. merges distribute across the function. For all :

(Because both the domain and codomain are semilattice spaces, semilattice homomorphism is the most precise term for this.)

Lattice morphisms are a special kind of monotonic function which are differentially computable. Because merge distributes over a morphism, we can evaluate the morphisms on a small "delta" of data and merge that delta into the existing result rather than recompute the entire morphism on all data.

Lattice Bimorphism

A function is a lattice bimorphism if it acts like a lattice morphism separately in both of its arguments. For all and :

Lattice bimorphisms are differentially computable in both arguments, in the same way morphisms are.